VRBot - Intro

OVERVIEW

This project was my first solo dive into the wonderful world of virtual reality. I was trying to create a VR-remote-controlled robot that worked in real time. I wanted to be able to strap on the headset, and have live video be displayed in front of me with a control panel that physically controlled a robot in the real world.

LIMITATIONS & CHALLENGES

There were so many things that I was worried about going into this project. I had never done any extensive work in virtual reality, I had never done much in the way of image processing, and I had never been good at figuring out network connections. On top of my lack of knowledge, I was worried that what I was trying to do was not physically possible in VR.

Links:

Code: https://github.com/Tdoe4321/VRBot

Research Paper: https://www.uab.edu/engineering/me/images/Documents/about/early_career_technical_journal/Year_2019_Vol_18-Section3.pdf

GAME PLAN

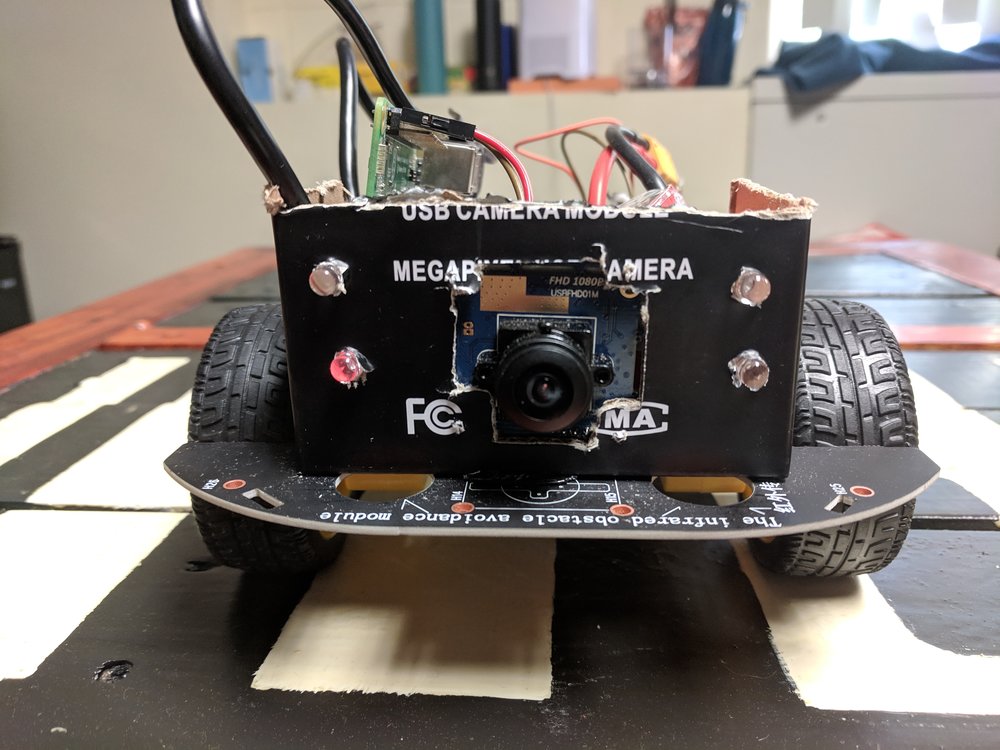

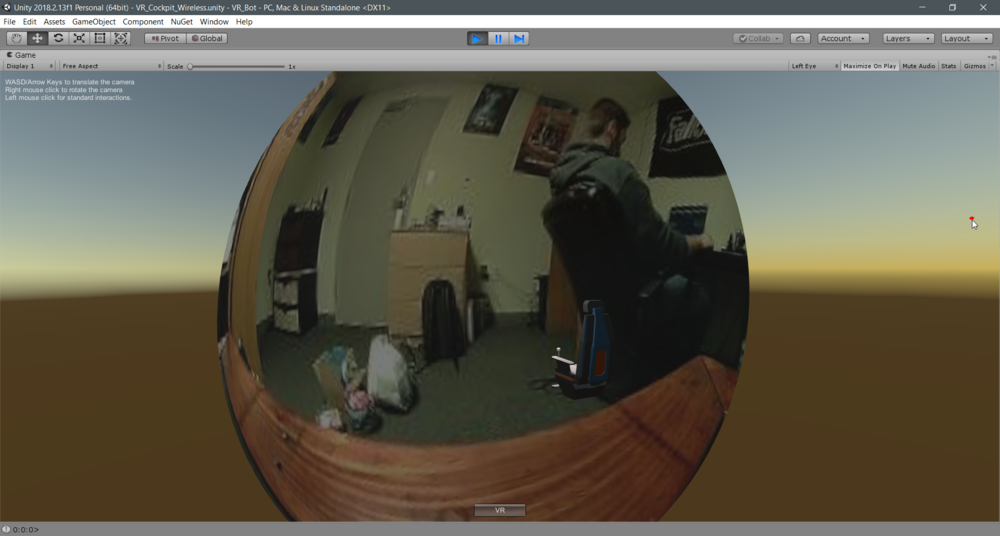

For this project, I think it’s useful to lay out in more depth exactly what I was trying to do. First, I wanted to get a VR environment set up in Unity that I could interact with and reliably use. I wanted to know that I was capable enough with Unity that I knew what I was generally doing. The next step was to get video from a USB webcam to show up somewhere in VR in real time. I found a fisheye camera on Amazon that suited my needs. For the live video to look good, I knew that I wanted the object that the video was displayed on to also be curved, giving the illusion that you were actually inside of a craft controlling it. To do this, I learned how to use Blender, a free 3D creation suite, and used it to create the main ‘window’ that the user sees the video on in VR.

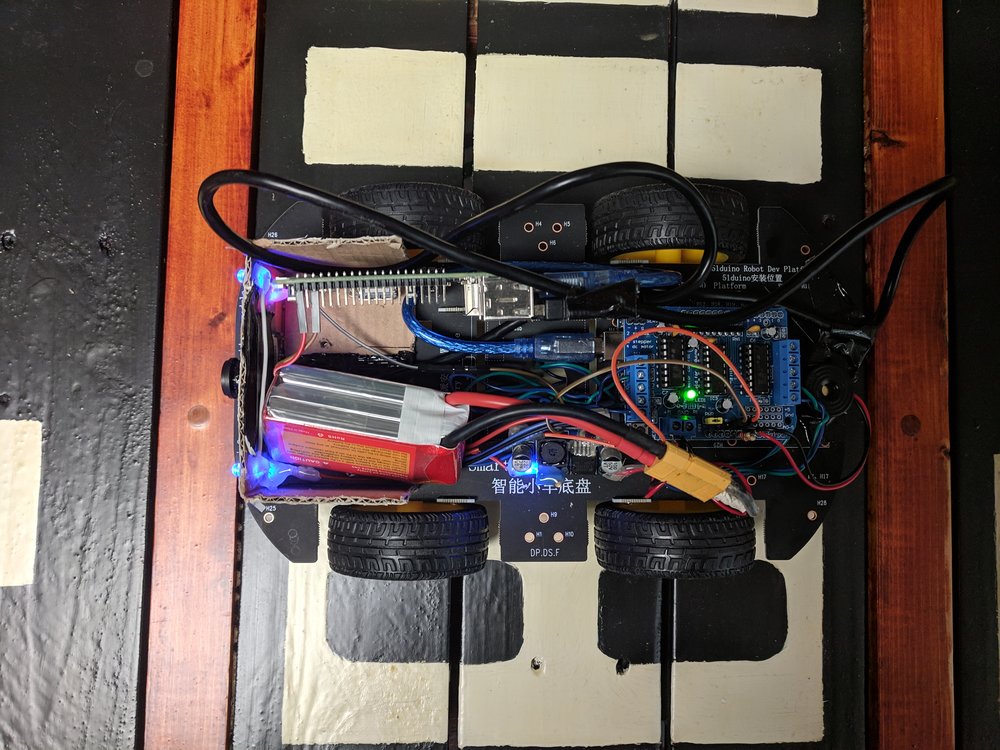

After getting live video working, it was time to hook it up to a robot. I chose to just buy a pre-made car kit on Amazon because the focus of this project was not on the physical components so much as on the software side of things. I also found an old board to drive the motors and hooked it up to an Arduino Uno. Soon after confirming that I could connect to the Arduino and send it serial commands from Unity, I added a VR command station with joysticks to control the two sets of wheels, and finally I had a working prototype.
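To make the joystick-to-serial step a little more concrete, here is a minimal sketch in Python rather than Unity's C#. It is not the project's actual code (that lives in the linked repo). The `L<speed>,R<speed>` line format, the function names, and the 255 maximum are all assumptions chosen for illustration: each joystick axis is clamped to [-1, 1], scaled to a signed motor speed, and packed into one newline-terminated ASCII command that a microcontroller could parse.

```python
def joystick_to_pwm(value: float, max_pwm: int = 255) -> int:
    """Map a joystick axis in [-1.0, 1.0] to a signed motor speed."""
    clamped = max(-1.0, min(1.0, value))  # ignore out-of-range input
    return round(clamped * max_pwm)


def encode_drive_command(left: float, right: float) -> bytes:
    """Build one ASCII command line (hypothetical format, e.g. b"L255,R-255\\n")."""
    line = f"L{joystick_to_pwm(left)},R{joystick_to_pwm(right)}\n"
    return line.encode("ascii")


if __name__ == "__main__":
    # Left joystick fully forward, right joystick fully back: spin in place.
    print(encode_drive_command(1.0, -1.0))
```

The resulting bytes would then be written to the serial port the Arduino is attached to. A plain-text, one-line-per-command format like this is easy to debug from a serial monitor, which is handy when the motor board is an unknown quantity.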

And my goodness does it work! I was so ecstatic when I first put on the headset and was able to drive around a car, in REAL TIME, through my VR headset with a virtual command console in front of me. It was awesome. But the biggest downside was that the car was still tethered to my PC. You had to have a couple of extension cables hooked together to be able to move freely, which, as you can imagine, wasn’t great. So, onto the next challenge: going wireless.

This was easily one of the hardest, most hair-pulling, deeply infuriating, and, ultimately, totally rewarding parts of the project. I hooked the USB camera up to a Raspberry Pi with OpenCV on it and went from there. My plan was to send the video from the Raspberry Pi, over Wi-Fi, to my local router. From the router, I would then pick up the video on my computer running the VR instance, connected to the same network. This means it does not work unless both devices are on the same network, which I was fine with.

I tried many different things with which to send the video to my computer, but I settled on a library called ZeroMQ. This library makes network connections and interfacing very easy once you get it up and running. Put simply, I needed to do two things: (1) send the live video from my Pi to the VR station, and (2) send commands from the VR station back to the Raspberry Pi. I fiddled around (for many, many, many hours) until I found a way to get ZeroMQ working in Unity well enough to grab the video and display it. Getting this bi-directional communication working took by far the longest and was the hardest part of the project. But, I did get it working!

In loose terms, I’ve got a server/client setup. The Raspberry Pi acts as a server that is constantly pushing out video data over my local network. If the VR station is connected to the Pi’s IP address, then it can pick up the video and send commands back to the Pi if it wants to.
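For readers who want to picture the two-way link, here is a rough sketch using pyzmq, the Python bindings for ZeroMQ. It is an illustration under assumptions, not the project's actual code: the PUB/SUB pattern for video, the PUSH/PULL pattern for commands, the port numbers, the function names, and the JPEG quality value are all choices made for this example. The real VR side runs inside Unity, so treat the "station" half as a stand-in.

```python
import zmq

VIDEO_PORT = 5555    # hypothetical port for Pi -> VR station video
COMMAND_PORT = 5556  # hypothetical port for VR station -> Pi commands


def open_pi_sockets(ctx, host="*", video_port=VIDEO_PORT, command_port=COMMAND_PORT):
    """Pi side: bind both sockets so the Pi plays the 'server' role."""
    video = ctx.socket(zmq.PUB)      # broadcasts frames to any subscriber
    video.bind(f"tcp://{host}:{video_port}")
    commands = ctx.socket(zmq.PULL)  # receives commands from the station
    commands.bind(f"tcp://{host}:{command_port}")
    return video, commands


def open_station_sockets(ctx, pi_address, video_port=VIDEO_PORT, command_port=COMMAND_PORT):
    """VR station side: connect to the Pi's IP address on both ports."""
    video = ctx.socket(zmq.SUB)
    video.setsockopt(zmq.SUBSCRIBE, b"")  # empty prefix = receive every message
    video.connect(f"tcp://{pi_address}:{video_port}")
    commands = ctx.socket(zmq.PUSH)
    commands.connect(f"tcp://{pi_address}:{command_port}")
    return video, commands


def poll_command(commands):
    """Return one pending command, or None if nothing has arrived yet."""
    try:
        return commands.recv(flags=zmq.NOBLOCK)
    except zmq.Again:
        return None


def stream_camera(video, commands, jpeg_quality=50):
    """Pi main loop: publish JPEG frames and print any commands that come back."""
    import cv2  # imported here because only the Pi needs OpenCV

    camera = cv2.VideoCapture(0)
    try:
        while True:
            ok, frame = camera.read()
            if not ok:
                break
            ok, jpeg = cv2.imencode(".jpg", frame, [cv2.IMWRITE_JPEG_QUALITY, jpeg_quality])
            if ok:
                video.send(jpeg.tobytes())
            command = poll_command(commands)
            if command is not None:
                print("command from VR station:", command)
    finally:
        camera.release()
```

On the Pi, this would be started with something like `stream_camera(*open_pi_sockets(zmq.Context()))`. The station calls `open_station_sockets` with the Pi's IP address, reads frames with `video.recv()`, and sends commands with `commands.send(b"...")`. One reason to keep the Pi's polling non-blocking is that the video loop never stalls while waiting for a command that may not come.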

So, what is the state of the project right now? It can be driven around wirelessly in VR and even has a horn you can honk. However, to get real-time video working over the network, I had to lower the quality of the video pretty significantly, so it’s not as clear as I would like it to be, but it is definitely drivable.
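Some back-of-the-envelope arithmetic shows why lowering the quality helps so much. The sketch below computes the bit rate of uncompressed video at two example settings. The resolutions and frame rate are illustrative assumptions, not the settings actually used in this project, and real streams are usually compressed, so the absolute numbers matter less than the ratio between them.

```python
def raw_video_mbps(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> float:
    """Bit rate of uncompressed video in megabits per second."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * 8 / 1_000_000


if __name__ == "__main__":
    # Example settings only; not the values used on the actual robot.
    for width, height in [(640, 480), (320, 240)]:
        rate = raw_video_mbps(width, height, fps=30)
        print(f"{width}x{height} @ 30 fps: {rate:.1f} Mbit/s uncompressed")
```

Halving both dimensions cuts the data per frame to a quarter (about 221 Mbit/s down to about 55 Mbit/s in this example), which is why a modest drop in resolution can make the difference between a laggy stream and a drivable one.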